How to Use the OpenAI SDK with Puter


In this tutorial, you'll learn how to use the OpenAI SDK with Puter. Puter now exposes an OpenAI-compatible endpoint, so tools, libraries, and frameworks built for the OpenAI API can work with Puter once you point them at Puter's base URL and use your Puter auth token as the API key.

Prerequisites

- A Puter account

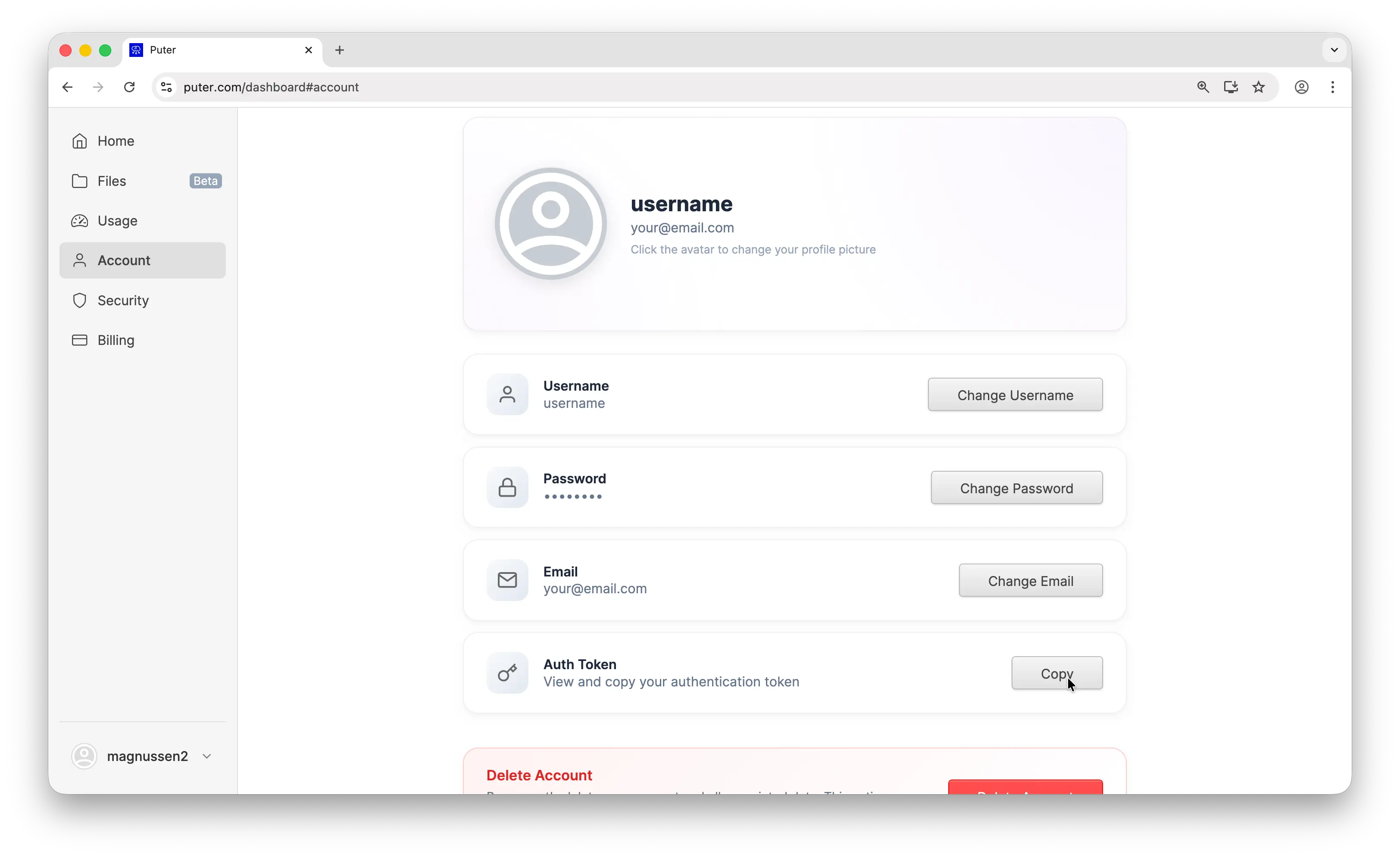

- Your Puter auth token: go to puter.com/dashboard and click Copy to get it

- Node.js installed on your machine

Setup

Install the OpenAI SDK:

```bash
npm install openai
```

Then configure the client with Puter's base URL and your auth token:

```javascript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.puter.com/puterai/openai/v1/",
  apiKey: "YOUR_PUTER_AUTH_TOKEN",
});
```

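Replace YOUR_PUTER_AUTH_TOKEN with the auth token you copied from your Puter dashboard. That's all you need to start making requests. The examples below use ES module `import` syntax and top-level `await`, so save them as `.mjs` files (or add `"type": "module"` to your `package.json`) before running them with Node.js.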
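Pasting the token into source code is fine for a quick test, but it is easy to commit it by accident. A minimal sketch that reads it from an environment variable instead is below; `PUTER_AUTH_TOKEN` and `puterClientOptions` are names chosen for this example, not anything Puter defines:

```javascript
// Hypothetical helper: builds OpenAI client options for Puter's endpoint.
// PUTER_AUTH_TOKEN is an illustrative variable name, not one Puter requires.
const PUTER_BASE_URL = "https://api.puter.com/puterai/openai/v1/";

function puterClientOptions(env = process.env) {
  const apiKey = env.PUTER_AUTH_TOKEN;
  if (!apiKey) {
    // Fail fast with a clear message instead of sending an unauthenticated request
    throw new Error("PUTER_AUTH_TOKEN is not set; copy your token from puter.com/dashboard");
  }
  return { baseURL: PUTER_BASE_URL, apiKey };
}
```

After running `export PUTER_AUTH_TOKEN="your-token"` in your shell, you would create the client with `new OpenAI(puterClientOptions())`.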

Example 1: Basic Chat Completion

Let's start with the simplest possible example, a single chat completion:

```javascript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.puter.com/puterai/openai/v1/",
  apiKey: "YOUR_PUTER_AUTH_TOKEN",
});

const response = await client.chat.completions.create({
  model: "gpt-5-nano",
  messages: [
    { role: "user", content: "What is the capital of France?" },
  ],
});

console.log(response.choices[0].message.content);
```

This sends a single message to gpt-5-nano and prints the response. The API is identical to what you'd use with OpenAI directly. The only difference is the base URL and auth token.
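Each request is independent: OpenAI's Chat Completions API does not remember earlier calls, so a multi-turn conversation means resending the history every time. Assuming Puter's endpoint behaves the same way, a small helper could look like the sketch below. `chatTurn` is a hypothetical name, and `client` is any object with a `chat.completions.create` method, such as the client configured above:

```javascript
// Sketch of one turn in a multi-turn chat. The API keeps no conversation
// state, so the full history is sent with every request.
async function chatTurn(client, history, userText, model = "gpt-5-nano") {
  const userMessage = { role: "user", content: userText };

  const response = await client.chat.completions.create({
    model,
    messages: [...history, userMessage],
  });
  const replyText = response.choices[0].message.content;

  // Record the turn only after the request succeeded, so a failed call
  // doesn't leave an unanswered user message in the history
  history.push(userMessage, { role: "assistant", content: replyText });
  return replyText;
}
```

Start with `const history = [];`, then call `await chatTurn(client, history, "What is the capital of France?")` followed by `await chatTurn(client, history, "What is its population?")`; the second question can refer to "its" because the first exchange is resent with it.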

Example 2: Streaming

For longer responses, streaming gives you results in real time as they're generated:

```javascript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.puter.com/puterai/openai/v1/",
  apiKey: "YOUR_PUTER_AUTH_TOKEN",
});

const stream = await client.chat.completions.create({
  model: "gpt-5-nano",
  messages: [
    { role: "user", content: "Write a short story about a robot learning to paint." },
  ],
  stream: true,
});

for await (const chunk of stream) {
  const content = chunk.choices[0]?.delta?.content;
  if (content) {
    process.stdout.write(content);
  }
}
```

Set `stream: true` and iterate over the chunks as they arrive. Each chunk carries a small piece of the response in `choices[0].delta.content`, which you can display immediately.
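If you also need the complete text once streaming finishes (for example, to save it or add it to a conversation history), you can accumulate the deltas while printing them. This sketch, using the hypothetical helper name `collectStream`, works on any async iterable of chunks shaped like the ones above:

```javascript
// Sketch: consume a Chat Completions stream, pass each text delta to a
// callback as it arrives, and return the full text when the stream ends.
async function collectStream(stream, onDelta = () => {}) {
  let text = "";
  for await (const chunk of stream) {
    // Some chunks carry no text (e.g. a role-only first chunk, or a chunk
    // with an empty choices array), so guard every step of the lookup
    const delta = chunk.choices[0]?.delta?.content;
    if (delta) {
      onDelta(delta);
      text += delta;
    }
  }
  return text;
}
```

Called as `const story = await collectStream(stream, (text) => process.stdout.write(text));`, it prints the story as it arrives and returns the whole story at the end.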

Example 3: Use a Non-OpenAI Model

This is where it gets interesting. Same code, same endpoint. Just swap the model parameter to use Claude, Gemini, Grok, or any other supported model:

```javascript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.puter.com/puterai/openai/v1/",
  apiKey: "YOUR_PUTER_AUTH_TOKEN",
});

// Use Claude
const claude = await client.chat.completions.create({
  model: "claude-sonnet-4-5",
  messages: [
    { role: "user", content: "What is the capital of France?" },
  ],
});

console.log("Claude:", claude.choices[0].message.content);

// Use Gemini
const gemini = await client.chat.completions.create({
  model: "gemini-2.5-flash-lite",
  messages: [
    { role: "user", content: "What is the capital of France?" },
  ],
});

console.log("Gemini:", gemini.choices[0].message.content);

// Use Grok
const grok = await client.chat.completions.create({
  model: "grok-4-1-fast",
  messages: [
    { role: "user", content: "What is the capital of France?" },
  ],
});

console.log("Grok:", grok.choices[0].message.content);
```

One endpoint, any supported model. You don't need separate SDKs, separate API keys, or separate billing accounts. Switch between providers by changing a single string.
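Because only the model string changes, comparing providers becomes a loop. The sketch below, with the hypothetical helper name `askEveryModel`, sends one prompt to several models in parallel. It uses `Promise.allSettled` rather than `Promise.all` so that one unavailable or misspelled model ID is reported as an error instead of discarding every other answer; which IDs actually work depends on Puter's list of supported models, not on this code:

```javascript
// Sketch: send the same prompt to several models concurrently and collect
// each answer (or error message) keyed by model ID.
async function askEveryModel(client, models, prompt) {
  const results = await Promise.allSettled(
    models.map((model) =>
      client.chat.completions.create({
        model,
        messages: [{ role: "user", content: prompt }],
      }),
    ),
  );

  const answers = {};
  results.forEach((result, i) => {
    answers[models[i]] =
      result.status === "fulfilled"
        ? result.value.choices[0].message.content
        : `error: ${result.reason?.message ?? result.reason}`;
  });
  return answers;
}
```

For example, `await askEveryModel(client, ["gpt-5-nano", "claude-sonnet-4-5", "gemini-2.5-flash-lite"], "What is the capital of France?")` returns an object with one entry per model ID.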

Example 4: Tool/Function Calling

Function calling lets the model request structured data from your code. Here's an example with a simple get_weather tool:

```javascript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.puter.com/puterai/openai/v1/",
  apiKey: "YOUR_PUTER_AUTH_TOKEN",
});

// Define the tool
const tools = [
  {
    type: "function",
    function: {
      name: "get_weather",
      description: "Get the current weather for a given location",
      parameters: {
        type: "object",
        properties: {
          location: {
            type: "string",
            description: "City name, e.g. San Francisco",
          },
        },
        required: ["location"],
      },
    },
  },
];

// Send the request with tools
const response = await client.chat.completions.create({
  model: "gpt-5-nano",
  messages: [
    { role: "user", content: "What's the weather like in Tokyo?" },
  ],
  tools: tools,
});

// Handle the tool call
const toolCall = response.choices[0].message.tool_calls?.[0];

if (toolCall) {
  const args = JSON.parse(toolCall.function.arguments);
  console.log(`Model wants to call: ${toolCall.function.name}`);
  console.log(`With arguments:`, args);

  // Simulate a tool response
  const toolResult = JSON.stringify({ temperature: "22°C", condition: "Partly cloudy" });

  // Send the tool result back to the model
  const finalResponse = await client.chat.completions.create({
    model: "gpt-5-nano",
    messages: [
      { role: "user", content: "What's the weather like in Tokyo?" },
      response.choices[0].message,
      {
        role: "tool",
        tool_call_id: toolCall.id,
        content: toolResult,
      },
    ],
    tools: tools,
  });

  console.log(finalResponse.choices[0].message.content);
}
```

The model analyzes the user's question, decides it needs weather data, and returns a structured tool call. Your code executes the function, sends the result back, and the model generates a final response using that data.
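The example above handles only the first tool call and a single round trip. A model can return several tool calls in one response, and OpenAI's API expects a `role: "tool"` message answering each `tool_call_id` before the conversation continues. A minimal sketch of a more general loop, assuming Puter's endpoint passes tool calls through the same way, might look like this; `runWithTools` and the `localTools` map of your own implementations are hypothetical names introduced here:

```javascript
// Sketch of a general tool-calling loop: run every tool call the model
// requests, send the results back, and repeat until the model answers in text.
async function runWithTools(client, { model, messages, tools, localTools, maxRounds = 5 }) {
  const history = [...messages];

  for (let round = 0; round < maxRounds; round++) {
    const response = await client.chat.completions.create({ model, messages: history, tools });
    const message = response.choices[0].message;
    history.push(message);

    // No tool calls means the model has produced its final answer
    if (!message.tool_calls?.length) return message.content;

    for (const call of message.tool_calls) {
      const impl = localTools[call.function.name];
      const result = impl
        ? await impl(JSON.parse(call.function.arguments))
        : { error: `Unknown tool: ${call.function.name}` };
      // Each tool message must reference the id of the call it answers
      history.push({ role: "tool", tool_call_id: call.id, content: JSON.stringify(result) });
    }
  }
  // Guard against a model that keeps requesting tools indefinitely
  throw new Error(`No final answer after ${maxRounds} rounds`);
}
```

With the `tools` array from the example above, you might pass `localTools: { get_weather: async ({ location }) => ({ temperature: "22°C", condition: "Partly cloudy" }) }`, where the stub would be replaced by a real weather lookup. Reporting an unknown tool back to the model, rather than throwing, gives it a chance to recover.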

Example 5: cURL

If you prefer raw HTTP requests or want to use the API from any language, here's the same chat completion as a cURL command:

```bash
curl https://api.puter.com/puterai/openai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_PUTER_AUTH_TOKEN" \
  -d '{
    "model": "gpt-5-nano",
    "messages": [
      {"role": "user", "content": "Hello, how are you?"}
    ]
  }'
```

The API follows the OpenAI spec, so any HTTP client that works with OpenAI will work here. Just change the base URL and use your Puter auth token as the Bearer token.
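The same request also works from JavaScript without the SDK. This sketch assumes Node.js 18 or later, where `fetch` is built in; `buildChatRequest` and `chatViaFetch` are hypothetical helper names, and the request mirrors the cURL command above:

```javascript
// Sketch: the cURL request above, sent with the built-in fetch API
// (Node.js 18+) instead of the OpenAI SDK.
const CHAT_COMPLETIONS_URL = "https://api.puter.com/puterai/openai/v1/chat/completions";

// Build the URL and fetch options separately so they are easy to inspect
function buildChatRequest(token, body) {
  return {
    url: CHAT_COMPLETIONS_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${token}`,
      },
      body: JSON.stringify(body),
    },
  };
}

async function chatViaFetch(token, body) {
  const { url, init } = buildChatRequest(token, body);
  const res = await fetch(url, init);
  if (!res.ok) {
    // Surface the status and response body instead of failing on res.json()
    throw new Error(`HTTP ${res.status}: ${await res.text()}`);
  }
  return res.json();
}
```

For example, `const data = await chatViaFetch(token, { model: "gpt-5-nano", messages: [{ role: "user", content: "Hello, how are you?" }] });` followed by `console.log(data.choices[0].message.content);` prints the reply.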

Conclusion

That's it. You now have a single endpoint that gives you access to GPT, Claude, Gemini, Grok, and more, all through the familiar OpenAI SDK. No need to juggle multiple API keys or rewrite your code when you want to try a different model.

To go further, check out the full Puter.js documentation or browse the complete list of supported AI models. You can also learn more about the Puter.js AI API for additional features like vision, text-to-speech, and image generation.

